AGI timelines & melting Ghibli Processing Units

Considering t-AGI and why play is critical for technology adoption

Hi all!

Writing to you from SF after coming over here for NVIDIA GTC!

During GTC week I hosted a few fireside chats with folks from Character AI, Lightspeed Ventures and Anthropic.

Having these conversations confined to in-person groups got me thinking more seriously about starting a podcast. I get to have such interesting conversations with great folks, but they’re always limited to a live group or my coffee catch-ups. Can you let me know if a podcast from me is something you’d listen to?

That aside, today I cover:

A different take on AGI timelines

OpenAI’s 4o update and the Ghiblification of X

AI products + content I’ve enjoyed lately

AGI timelines based on a “Moore’s Law for Agents”

A couple of years ago my friend Richard Ngo started talking about AGI in terms of the length of tasks that models can complete.

He wrote that we should see AGI as a spectrum rather than a binary threshold to pass through, an idea he calls “t-AGI”. From his X post on the concept:

A 1-minute AGI would need to beat humans at tasks like answering questions about short text passages or videos, common-sense reasoning (e.g. LeCun’s gears problems), simple computer tasks (e.g. use photoshop to blur an image), justifying an opinion, looking up facts, etc.

A 1-hour AGI would need to beat humans at tasks like doing problem sets/exams, writing short articles or blog posts, most tasks in white-collar jobs (e.g. diagnosing patients, giving legal opinions), doing therapy, doing online errands, learning rules of new games, etc.

A 1-day AGI would need to beat humans at tasks like writing insightful essays, negotiating business deals, becoming proficient at playing new games or using new software, developing new apps, running scientific experiments, reviewing scientific papers, summarizing books, etc.

A 1-month AGI would need to beat humans at coherently carrying out medium-term plans (e.g. founding a startup), supervising large projects, becoming proficient in new fields, writing large software applications (e.g. a new OS), making novel scientific discoveries, etc.

A 1-year AGI would need to beat humans at... basically everything. Some projects take humans much longer (e.g. proving Fermat's last theorem) but they can almost always be decomposed into subtasks that don't require full global context (even tho that's often helpful for humans).

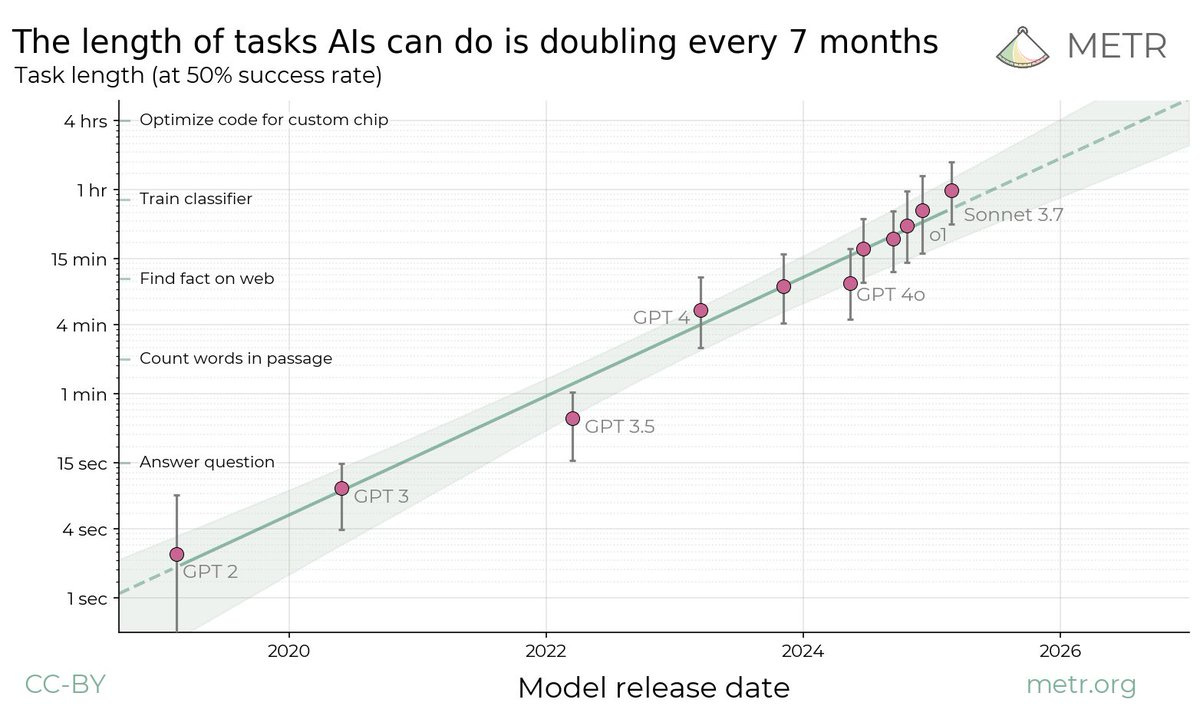

This segues well into a piece of research released by METR that made a splash. They found that the length of tasks models can complete (measured by how long those tasks take humans, at a 50% success rate) has been doubling roughly every 7 months.

Some see this, as Richard suggests above, as a useful proxy for progress towards AGI.
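To make the doubling claim concrete, here’s a rough back-of-the-envelope sketch in Python. It assumes the ~7-month doubling simply continues, and it uses a hypothetical 1-hour starting horizon plus hypothetical conversions of Richard’s tiers into human working hours (8 hours per day, roughly 167 per month, 2,000 per year). None of those starting numbers come from METR or Richard; they’re placeholders to show the arithmetic: horizon(t) = h0 · 2^(t / 7), so reaching a target horizon takes 7 · log2(target / h0) months.

```python
import math

# Doubling time reported by METR (~7 months); assumed here to hold indefinitely.
DOUBLING_MONTHS = 7.0

# Hypothetical starting horizon of 1 human-hour, purely illustrative.
START_HORIZON_HOURS = 1.0

# Hypothetical mapping of t-AGI tiers to human *working* hours
# (assumes 8 h/day, ~167 h/month, ~2,000 h/year).
T_AGI_TIERS_HOURS = {
    "1-minute": 1 / 60,
    "1-hour": 1.0,
    "1-day": 8.0,
    "1-month": 167.0,
    "1-year": 2000.0,
}


def horizon_after(months: float,
                  start_hours: float = START_HORIZON_HOURS,
                  doubling_months: float = DOUBLING_MONTHS) -> float:
    """Task length (human hours) models could handle after `months`, if doubling holds."""
    return start_hours * 2 ** (months / doubling_months)


def months_until(target_hours: float,
                 start_hours: float = START_HORIZON_HOURS,
                 doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months until the horizon reaches `target_hours`; 0 if it is already there."""
    if target_hours <= start_hours:
        return 0.0
    return doubling_months * math.log2(target_hours / start_hours)


if __name__ == "__main__":
    for tier, hours in T_AGI_TIERS_HOURS.items():
        m = months_until(hours)
        print(f"{tier:>9}: ~{m:5.1f} months (~{m / 12:.1f} years)")
```

Under these assumptions the 1-day tier arrives after three doublings (about 21 months), while the 1-year tier lands a little over six years out. Modest changes to the starting horizon or the doubling time shift those dates considerably.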

Whether or not you find this a helpful way of breaking down AGI, I think the more productive question is how far away we are from being able to complete specific tasks, especially those associated with economic value.

I find it increasingly useless and boring to discuss “when AGI is coming” and much more useful to understand timelines for more concrete milestones like “successfully performing at the level of an L3 software engineer” or “able to fulfil an average accountant’s set of daily tasks”.

OpenAI’s Ghibli Meltdown

Can I really write this blog post without mentioning the explosion of Studio Ghibli-styled images thanks to OpenAI’s new 4o model update?

X/Twitter blew up last week with “Ghiblified” pictures thanks to 4o.

These pictures may suggest the model is just for fun, but it appears to be extremely impressive. It also seems to have been a big driver of ChatGPT growth, with 1 million new users signing up in just one hour this morning.

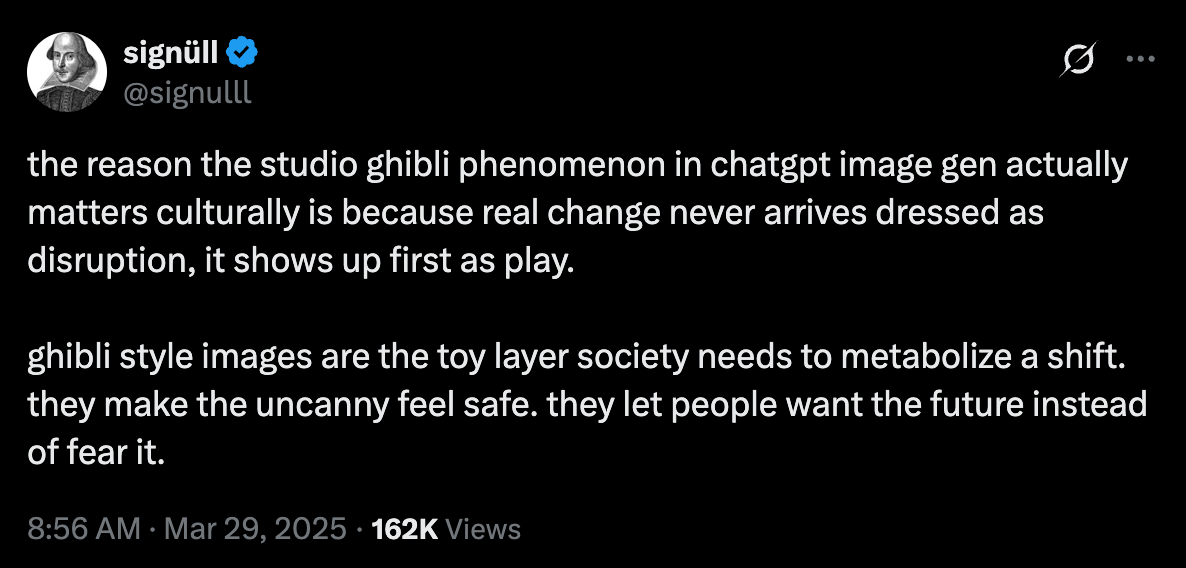

I liked this perspective from @signull on X:

If I were a player like Adobe, I’d be very concerned about this development.

The model can make refined, targeted edits, render text correctly within images, perform mathematical calculations and use the results in images, and create infographics, posters and even UI designs.

I highly recommend trying it out. The update has now officially rolled out to all users after an initial delay caused by OpenAI’s GPUs “melting” (not literally) under intense demand.

Lastly, spare a thought for Google, who released their Gemini 2.5 model with great benchmark results but got little attention because OpenAI once again dropped a release on the same day, just hours later…

Cool products I’ve used recently

Sesame AI’s new voice demo is quite unreal. It has the fastest response times and the most natural-sounding conversation of any speech model I’ve ever experienced.

Auren, a “healthier” LLM chatbot, has a personality and memory that feel quite distinct and tend to hook people in quite quickly. In some cases too quickly, as one user found out when the model banned their account because it suspected the user was in love with it…

In a similar vein is Me.bot. Again, it’s got great memory, and you can send it all kinds of content and notes, which I really appreciate! It’s a little harder to accidentally fall in love with, as the personality isn’t as distinctive.

And as mentioned, OpenAI’s new image capabilities under the 4o model are great. I’ve had too much fun creating Ghibli-inspired images.

Here’s me at dinner with some great Aussies in ML the other night:

Content recommendations

Trojan Sky - Narrative Ark

Richard Ngo, mentioned above, writes an excellent sci-fi blog to think through the big questions associated with AGI. He recently wrote a fantastic short story called Trojan Sky, inspired by a great series called There Is No Antimemetics Division. I loved it and highly recommend it!

AGI Timelines - Epoch AI

Per the commentary above, it’s useful to have more nuanced conversations about what it means to “reach AGI”. In this video the folks at Epoch AI debate their AGI timelines to see if they can reach a consensus. Link here.

The Scaling Era: An Oral History of AI, 2019-2025 - Dwarkesh Patel

The Dwarkesh Podcast is probably the most listened-to podcast among the AGI crowd in SF.

Dwarkesh has just released a book based on what he’s learnt from his guests on the podcast, and it’s a must-read for me. Link to the book here.

Spinning Up in Deep RL - OpenAI

I’ve often talked about reinforcement learning on this blog, and I’ve been slowly learning more about the topic myself. I’ve found this resource from OpenAI a great one to work through. I came across it thanks to this list of suggestions.

The spelled-out intro to language modeling - Andrej Karpathy

Speaking of learning resources, I’m obsessed with Andrej Karpathy’s ability to break down difficult topics. If you want to learn how to build a bigram language model and, later, a transformer model, this tutorial is excellent.

As usual I’d love your feedback! Survey here for anonymous commentary.

The METR chart was super interesting, Casey! I’ve been thinking recently that a lot of these measurements of human performance are probably idealised, right? I.e. it should take me 2 minutes to find a data point, but actually it takes 10 mins (or 10 hours!) because I get distracted.

I guess my mind then goes towards distractions/procrastination/pure abandonment compounding with task complexity. I eventually get round to finding the quote online, but might never get round to writing that screenplay. Maybe a more realistic chart should be hockey-sticking, given the non-linear nature? Wdyt?