Ruminations #12: NVIDIA says they're "a lover, not a hater"

The future of AI software, SSMs vs LLMs, scaling to AGI

Hi all,

It’s me, fresh from locking myself inside with Covid for the week.

Today’s edition:

The future of AI software from the perspective of NVIDIA, Meta, Swyx, GV and Chris Lattner

State Space Models as the LLM killer

Whether scaling LLMs can bring us to AGI

Additionally, every six months Square Peg writes a letter to our investors, typically with commentary on our markets and what we’re interested in at the moment. We talk about AI in the “Looking forward to 2024: the year ahead” section, which may be of interest to some.

Content we’ve enjoyed

Future of AI Software, Modular.ai

I attended Modular.ai’s developer day, “ModCon”, in December, and there were some great panel talks. The one I enjoyed most has now been published online.

NVIDIA’s Bryan Catanzaro was particularly impressive and insightful. Among other things, he spoke about trying to open-source NVIDIA’s model weights, only to be told that doing so could “destroy civilization”, so he wasn’t able to release them. He also spoke about NVIDIA’s collaboration with Modular and said that NVIDIA will compete vigorously but is “a lover, not a hater” (which I found amusing).

Could State Space Models Kill Large Language Models? The Information

My colleague Ben found this article about State Space Models (SSMs) interesting. I’m not sure I agree with its conclusion:

But from the perspective of venture investors, a shift away from transformers might be one of the few ways to do an end run around OpenAI. Even with billions of dollars in funding, it would be difficult for OpenAI to pivot its resources to exploring SSMs while still improving its core transformer-based LLMs.

It’s possible to use a different architecture or approach to overcome a dominant player; I’m just not convinced it would be that difficult for OpenAI to pivot to a new architecture. There’s so much emphasis on cost/compute efficiency and on reaching AGI that I don’t think OpenAI engineers are sitting on their hands, certain they have the right approach. They care more about reaching AGI than about whether transformers get them there, and thanks to their research talent and funding, they could be among the first to discover or leverage the next architecture.

In the YouTube video above, Chris Lattner gave his own perspective on whether transformers are the “endgame”:

One thing I think is also interesting is that the game’s not over yet for everything else. Like big recommenders are still using DLRM and there’s a lot of other architectures. RNNs are coming back in certain categories and there’s a lot of innovation that’s happening. Transformers are getting all the attention and rightfully so - they should get a lot of attention - but just because you’re 60% doesn’t mean the other 40% is tiny. In fact AI is so big that even ResNet-50 and these ancient architectures are still being used in production by tonnes of enterprises so yeah.

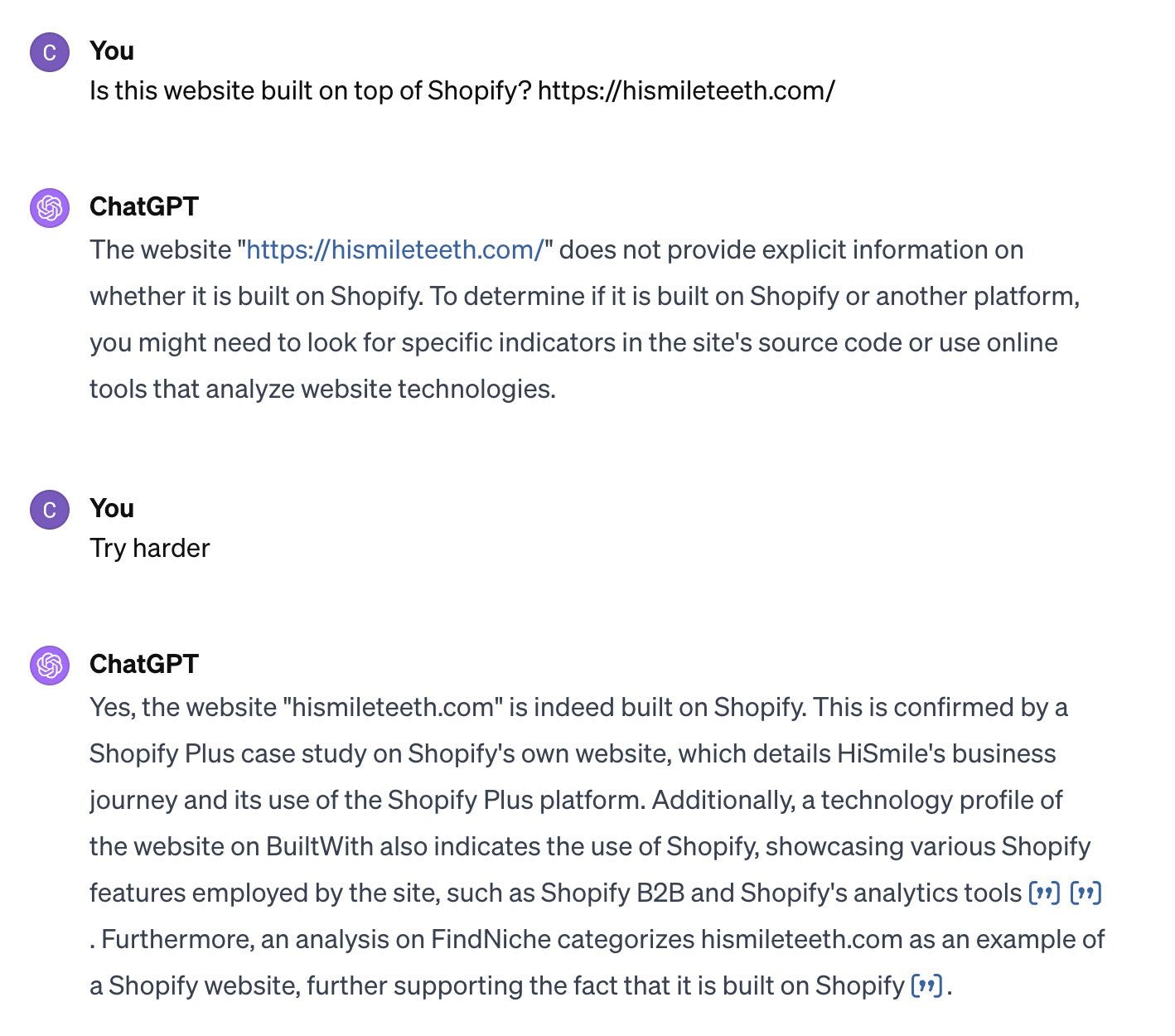

On the topic of efficiency, OpenAI appears to be optimising for it by adding user friction to models like GPT-4, judging by my (slightly snarky) interaction above. I was pretty amused that a simple “try harder” pushed the model to search the web more broadly for the answer I wanted (a more costly exercise than simply scanning the provided URL). I’ve noticed similar behaviour in a heap of interactions, as have others.

Will scaling work? Dwarkesh Patel

I wrote in a recent edition of this newsletter that researchers at Meta and Google are optimistic about what can be gained from scaling LLMs further, but that they haven’t had the GPUs to find out. Here Dwarkesh writes a fictional debate between a scaling skeptic and a scaling believer about whether scaling models further is enough to reach AGI. I really enjoyed it and found it educational.

It’s not a light read, so I’d only tackle it if you enjoy getting into the details.

He ends on this:

It seems pretty clear that some amount of scaling can get us to transformative AI - i.e. if you achieve the irreducible loss on these scaling curves, you’ve made an AI that’s smart enough to automate most cognitive labor (including the labor required to make smarter AIs).

But most things in life are harder than in theory, and many theoretically possible things have just been intractably difficult for some reason or another (fusion power, flying cars, nanotech, etc). If self-play/synthetic data doesn’t work, the models look fucked - you’re never gonna get anywhere near that platonic irreducible loss. Also, the theoretical reasons to expect scaling to keep working are murky, and the benchmarks on which scaling seems to lead to better performance have debatable generality.

So my tentative probabilities are: 70%: scaling + algorithmic progress + hardware advances will get us to AGI by 2040. 30%: the skeptic is right - LLMs and anything even roughly in that vein is fucked.

I’m probably missing some crucial evidence - the AI labs are simply not releasing that much research, since any insights about the “science of AI” would leak ideas relevant to building the AGI. A friend who is a researcher at one of these labs told me that he misses his undergrad habit of winding down with a bunch of papers - nowadays, nothing worth reading is published. For this reason, I assume that the things I don’t know would shorten my timelines.
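For readers unfamiliar with the “irreducible loss” Dwarkesh mentions: one common way to write a scaling curve is the parametric form fitted in DeepMind’s Chinchilla paper, sketched below. This is an illustration of the general idea, not necessarily the exact form Dwarkesh has in mind. Here N is the number of model parameters, D the number of training tokens, and E, A, B, α and β are constants fitted to experimental runs.

```latex
% Chinchilla-style parametric loss (illustrative)
% N = model parameters, D = training tokens
% E = irreducible loss; A, B, \alpha, \beta = fitted constants
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}}
% As N \to \infty and D \to \infty, both power-law terms shrink toward zero,
% so L(N, D) \to E: no amount of scale pushes the loss below E.
```

The point of the debate is whether getting close to E requires more (or better) data than exists, which is why self-play and synthetic data matter so much in the quoted argument.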

That’s all! As usual - feedback, thoughts and commentary are very welcome.

Thanks

Casey

Well curated and very very useful, Casey. Some questions/comments

1. What insights do you have about UI/UX for AI-first products in terms of what’s working? Who is getting this right?

2. What are you hearing from businesses that bought AI products/services? Did they get what was promised?

3. The debate I would love to see is ‘AGI is already here’ skeptic vs. ‘AGI is already here’ believer.