Hi all!

I’m writing this from a cafe in SF, where I’ve been spending plenty of time with folks at the frontier of AI.

A couple of nights after I landed in San Francisco, I was at a Halloween party when a friend at OpenAI mentioned that “AI winter is coming” before walking away from the conversation without explanation.

It made for a good Halloween scare!

They deftly dodged my follow-up questions, which was understandable given how hush-hush everything is at the frontier AGI labs.

I then asked a credible researcher friend at DeepMind about the comment. They disagreed, but said it was clear that scaling was getting harder and harder, and that they felt more reinforcement learning (RL) could be the saving grace (more on this below).

About a week later, Marc Andreessen was quoted saying:

"We've really slowed down in terms of the amount of improvement... we're increasing GPUs, but we're not getting the intelligence improvements, at all"

Two weeks later, quotes from a Reuters article sent ML Twitter (X) into a spin:

Ilya Sutskever, co-founder of AI labs Safe Superintelligence (SSI) and OpenAI, told Reuters recently that results from scaling up pre-training - the phase of training an AI model that uses a vast amount of unlabeled data to understand language patterns and structures - have plateaued.

…

Behind the scenes, researchers at major AI labs have been running into delays and disappointing outcomes in the race to release a large language model that outperforms OpenAI’s GPT-4 model, which is nearly two years old, according to three sources familiar with private matters.

For a while I’ve been saying that the next set of big model releases could be an important moment in deciding whether the hype around AI continues to build or fizzles out.

The capital invested in the largest AI rounds has been underpinned by the belief that more capital = more scale = orders of magnitude greater AI capabilities = the ability to create huge amounts of value.

If more scale no longer equals leaps in AI capabilities, the equation above breaks and we’re back in a world that focuses on research breakthroughs instead of hunting for inputs (like compute and data) to feed resource-hungry models.

What might this mean for founders and people in the space?

- Mega-rounds for AI companies working towards AGI may become even rarer
- The gravy train of cheap access to massive models might end (or large models may become even cheaper! See below)
- The model layer may become even more commoditised, with value and differentiation moving up the stack (developer tools, enablement, app layer etc.)
- Investors may become more bearish on funding AI in general
- Regulators may feel less urgency to rush into AI regulation
- Hyperscalers may offer fewer credits and less support for AI startups (the offerings are large right now!)
- The path to AGI may become less predictable: if we can’t rely on scaling, we may have to wait for research breakthroughs (NB: many researchers I’ve spoken to have never believed in scaling alone as a path, but some absolutely do)

There is some good news for founders, in my view:

- I, along with plenty of others, believe that there’s still value to be squeezed from foundation models in their current state:
  - Even with current capabilities, there are great businesses to be built
- The intensity of competition (from all angles: new entrants, industry incumbents and hyperscalers) may decrease if less funding is thrown at AI startups and enterprise AI initiatives
  - NB: this doesn’t mean competition in general won’t be fierce, but less capital means less fuel on the fire of competition and less irrational spending
- The meme of OpenAI wiping out huge numbers of startups with each release may become less true as labs struggle to make models more generalisable
- The re-orientation towards research-based performance improvements could mean cheaper models, as talent and capital would be directed towards finding efficiencies and new ways to do more with less

HOWEVER! We don’t know whether progress is truly stalling; foundation model builders may be keeping their cards close to their chest. This is just me reflecting on comments I’ve heard from friends in SF. I’m still optimistic and excited either way: there’s plenty of value yet to be extracted, and AI value can come from much more than just transformer models!

When I speak to investors in the leading players (OpenAI, Anthropic, SSI, Perplexity etc.), they say that the concern about model scaling hitting a wall is “based on old data” and “just noise”. They also say that the best breakthroughs are being closely guarded and aren’t ready for release. While I trust their judgement, it’s worth noting that both foundation model companies and their investors have a vested interest in continuing to tell people that scaling is yielding great results.

Foundation model companies need to project success and progress to keep raising mega-rounds, and their investors could risk upsetting their own capital providers (Limited Partners) if those billions went to NVIDIA GPUs instead of creating enterprise value.

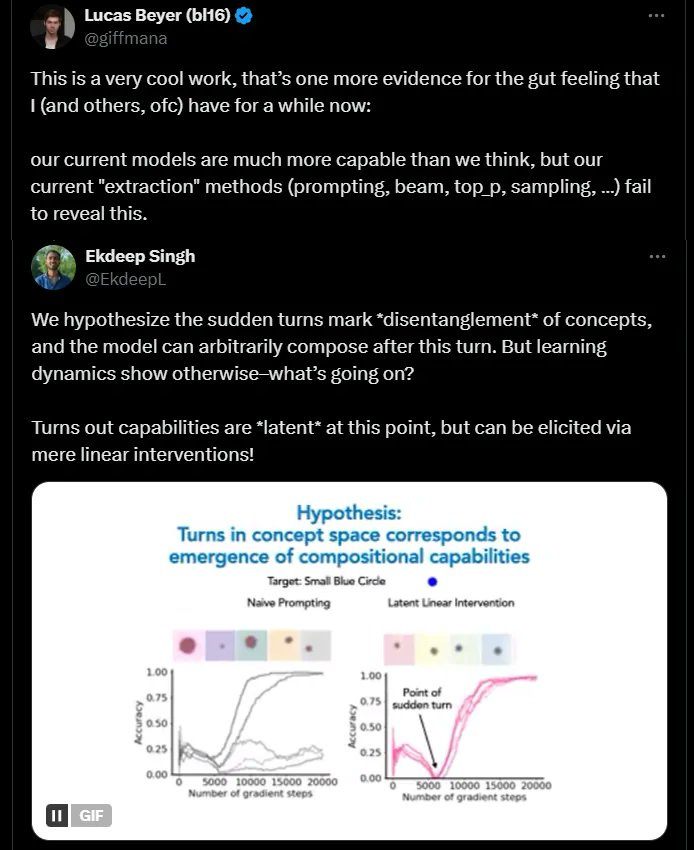

Researchers I speak to at the largest players still seem to believe in scaling, but acknowledge that it’s getting harder and think reinforcement learning is where to focus. So I expect we’ll see more references to reinforcement learning in future launches.

Many point to OpenAI’s o1 (also known by its codename “Strawberry”) as a clear example of how RL can squeeze more value out of models without making them much larger. There is some great commentary about that in this blog post.

They also believe RL might be key for overcoming data constraints. Data constraints are a meaningful problem at this stage; as Björn Ommer shared this time last year:

For every doubling of model size, the training data set should also be doubled. This means we may run out of data.

I’m not going to go into detail here, but RL may prove useful for “self-play”, where models generate synthetic data or take actions that lead to further data generation by humans. I personally believe initiatives around computer-use tools, like OpenAI’s upcoming “Operator”, are part of strategies to generate more data through reinforcement learning. The same goes for initiatives to create in-game agents, like those that can play Minecraft.

Anyway, that’s enough from me for now.

As always, love to hear your feedback!

Best,

Casey