Ruminations #4: hype and understanding immature technologies

A misc collection of content reflections

Hi all,

Happy Monday!

Today’s share is a mish-mash of content the team has enjoyed. A couple of things before jumping in:

I’m in Brisbane from Wednesday morning to Friday afternoon. If you’re working or building in AI in Brisbane, or know someone who is, I have some spare time on Wednesday for catch-ups. Let me know if you’d like to grab a coffee (or green tea in my case).

My colleagues and I have been meeting AI companies that are building products for developers, either to make their roles as developers easier with AI or to make certain workflows easier when working with gen AI. If that sounds like the kind of business you’re working on I’d love to chat and hopefully share some of our learnings, too.

Interesting content

Some great recommendations were shared around the Square Peg team this week.

Lucy Tan really enjoyed this collection of articles called the "Anti-hype LLM reading list". A few of her highlights:

It’s hard to make something production-grade with LLMs because of flexible inputs from the user and inconsistent + ambiguous outputs from the LLM.

As a result, a lot of what's out there is 'demo bullshit' (using the author's words!) that falls over the instant anyone tries to use it for a real task.

An LLM is not a product but an engine for features. Thin wrappers will disappear in 6, 12, 18 months etc. as the foundational models become more robust.

Chat is the wrong interface for most use cases (I personally feel strongly about this as a user who hates staring at a blank slate!). Good tools make it clear how they should and shouldn't be used but with chat, the burden is on the user to figure out what works.

The ones I liked most in the reading list were: Building LLMs for production, All the hard stuff nobody talks about when building products with LLMs, Why chatbots are not the future

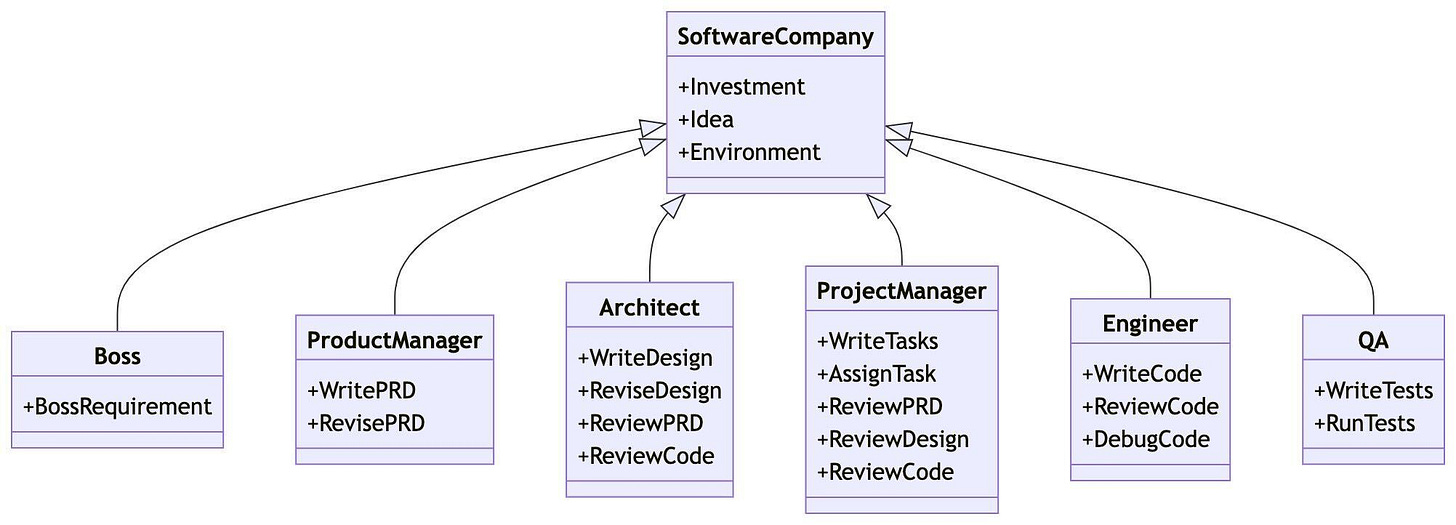

Ed Barker shared the MetaGPT GitHub repository and wrote:

This is one of the fastest growing libraries on GitHub right now.

Why? This is in some ways an open source incarnation of a business we met recently and allows you to spin up a full stack engineering team made up of AI agents - allowing you to code up a product with a single line prompt (though more back-end focused). It sits straight on top of GPT4. For me the consistent theme that seems to be playing out is the tech getting increasingly commoditised and understanding of the problem to be solved / product / G2M being where value comes from.

Tony Holt shared a recent Stratechery interview with Nat Friedman and Daniel Gross on the AI hype cycle, with the comment:

I found this interview super interesting: there was a lot of discussion as to why: why would large companies be created? In which area? Also a lot of historical analogising to the PC, to the internet era and discussing which companies created value and when they created it relative to those big breakthrough technologies.

One other thing I took from the interview is the competition and dynamics in the GPU space. [It’s] clearly driving NVIDIA but also so interesting to see what these 2 guys are doing in terms of essentially owning a cloud service (GPU clusters). Reason being there’s such a difficult path to accessing without owning and for early stage businesses the cost of ownership is prohibitively high. So these two guys (and now many other VCs) are providing clusters as a service - pretty high cost / high value add service for early stage AI businesses (they are recovering cost from customers, but there is a high capital outlay).

The Batch Newsletter Pre-Amble

I like to read DeepLearning.AI’s newsletter, The Batch. In the most recent update, Andrew Ng wrote a short pre-amble about the value of going a layer or two deeper in technical understanding of LLMs, given the technology is still immature.

I found this interesting both from the perspective of founders and my role as an investor. It’s relevant to my role as an investor because I don’t believe investors need to attempt to become overnight experts to invest in AI, but there is a balance to strive for in understanding.

It’s relevant to founders because of the reasons Andrew outlines in the extract quoted below. While I like the points made, I worry that founders who over-focus on the AI aspects of their product risk could missing the forest for the trees: In most cases, I see AI as just another tool in the toolkit of building a great product, not the entire toolkit.

In this respect, there’s an anecdote I often lean on from one of our AI portfolio companies. Several years ago, most of their industry competitors felt that competitive advantage would come from having the most accurate models, given they’re in healthcare and accuracy is paramount. This founder’s unique insight (unique at the time!) was that most of these models would end up at roughly similar accuracy and that they should focus their efforts on building a strong GTM motion and exceptional product experience. This team has, of course, still had a focus on technical excellence in AI, but they didn’t over-focus on it. It’s been a winning strategy for them.

I don’t believe that Andrew is suggesting founders should go so deep that they forget about business-building; my comment is tangential to what he’s written and more based on observations I’ve made on what founders have been focussing on when they speak to me.

Here’s Andrew’s pre-amble, shortened slightly for brevity:

Machine learning development is an empirical process. It’s hard to know in advance the result of a hyperparameter choice, dataset, or prompt to a large language model (LLM). You just have to try it, get a result, and decide on the next step. Still, understanding how the underlying technology works is very helpful for picking a promising direction. For example, when prompting an LLM, which of the following is more effective?

Prompt 1: [Problem/question description] State the answer and then explain your reasoning.

Prompt 2: [Problem/question description] Explain your reasoning and then state the answer.

These two prompts are nearly identical, and the former matches the wording of many university exams. But the second prompt is much more likely to get an LLM to give you a good answer. Here’s why: An LLM generates output by repeatedly guessing the most likely next word (or token). So if you ask it to start by stating the answer, as in the first prompt, it will take a stab at guessing the answer and then try to justify what might be an incorrect guess. In contrast, prompt 2 directs it to think things through before it reaches a conclusion. This principle also explains the effectiveness of widely discussed prompts such as “Let’s think step by step.”

…

That’s why it’s helpful to understand, in depth, how an algorithm works. And that means more than memorizing specific words to include in prompts or studying API calls. These algorithms are complex, and it’s hard to know all the details. Fortunately, there’s no need to. After all, you don’t need to be an expert in GPU compute allocation algorithms to use LLMs. But digging one or two layers deeper than the API documentation to understand how key pieces of the technology work will shape your insights. For example, in the past week, knowing how long-context transformer networks process input prompts and how tokenizers turn an input into tokens shaped how I used them.

A deep understanding of technology is especially helpful when the technology is still maturing. Most of us can get a mature technology like GPS to perform well without knowing much about how it works. But LLMs are still an immature technology, and thus your prompts can have non-intuitive effects. Developers who understand the technology in depth are likely to build more effective applications, and build them faster and more easily, than those who don't. Technical depth also helps you to decide when you can’t tell what’s likely to work in advance, and when the best approach is to try a handful of promising prompts, get a result, and keep iterating.

That’s all! As usual, I would love to hear your perspectives.

Thanks

Casey

Lucy's insights from the Anti-Hype LLM reading list are spot on. The author's point about - - a lot of what's out there is 'demo bullshit', is so true. It feels like a new breed of snake oil salesmen promising all sorts of outcomes and the folks signing up have no way of validating any of those claims.