Ruminations #6: AGI before 2030?

Will AGI make decentralisation a necessity rather than a nice-to-have?

Hi all,

This week I share notes from two talks at the QLD AI Summit, annotated with comments from my conversations with the speakers and with my own thoughts. I also share content the Square Peg team and I have enjoyed lately.

This is a long one. To make it easier to navigate, the section below includes links to specific comments you might find interesting.

As usual, I’d love to hear your thoughts, and feel free to share this with anyone you think may enjoy it.

Notes from Jeremy Howard of Fast.AI and Zack Kass, formerly Head of GTM at OpenAI

Last week I was in Brisbane meeting more great people working in AI, and I also attended the QLD AI Summit. Props to the QLD AI Hub: it was a really well-designed event, and I highly encourage people to go next year. It was awesome to see 600 (!) people show up in Brisbane for the event.

I really enjoyed both Jeremy Howard’s Q&A and Zack Kass’ keynote. I took near-verbatim notes of Zack’s talk, but they’re not perfect, so assume everything is paraphrased.

It’s worth mentioning that while Zack appeared to be representing OpenAI, he has recently left the company, so his views are not necessarily sanctioned by OpenAI (typically, someone speaking on a company’s behalf would need to stick to comms-approved talking points).

Nonetheless, many of his comments were interesting to me, and I’ve pulled out a series of them that I thought were worth sharing. You can see the full notes here. Given the notes are extensive, I have bookmarked certain statements so you can jump to the sections that interest you. You’ll need to request access.

If we were going to have an alignment problem, we’d have had it already

The cost to serve GPT-5 is several orders of magnitude higher than GPT-4

If you attach your identity to your job, I have bad news for you.

We have little tolerance for machine-error and that inhibits progress

Sam Altman’s heavy focus on comms and public perception as a risk for OpenAI

Jeremy Howard shared that he thinks the focus on bigger models and more compute has been a mistake. The deployment of these models (training and inference) has been done badly [Casey: by badly he seemed to mean inefficiently]. He says this makes sense because it’s been a race to get there, which means technical debt is high. Jeremy has been experimenting with ways to make these things faster.

He also spoke about the state of AI in Australia: that we are behind in AI, that there is a good deal of short-termism, and that universities taking large stakes in their PhD students’ IP or companies holds back progress.

Casey: I held a small dinner with several senior academics in AI the night before the AI Summit, and we spoke about this as an unfortunate aspect of academic entrepreneurship in Australia. I have heard from a good number of founders with research backgrounds coming out of Australian universities that they had to give up their business because of how much ownership the university took of either the IP or the business itself (after the founders took funding from the university). If anyone has a similar story, I’d like to hear about it so that I can help advocate for better outcomes for founders on this front.

Zack Kass shared that GPT-4 is 100x less expensive than when GPT was launched, and that it has gotten 10x cheaper in a year.

He said (again, all slightly paraphrased):

The current trajectory on cost is exciting. The belief is that, in the end, value doesn’t accrue to the big companies. The rate at which models are being compressed means that open source might eventually win and GPT-4 could run on a single GPU. I bet that will happen in the next 18 months, enabling it to run disconnected from the internet.

Casey: I asked him about his comment that open source will accrue the value of AI because the models will be commoditised. I think it’s too early to say confidently that open source will “win”, but it’s certainly interesting to speculate. I asked him what this meant for what OpenAI’s product becomes: if the underlying models are commoditised, what will OpenAI create value with? An interface? Developer tools? Something else?

He said likely consumer interfaces. I asked, “what about dev products or API offerings?” and he reaffirmed that consumer products were the vision, given the size of the problems they could solve. I’m not sold, but I do know that some people I speak to believe that if AI becomes general enough, you can capture a huge amount of value without needing to offer anything to developers.

We spoke briefly about concerns around a small number of players (or a single player) accruing all of the value of AGI if there are no systems to distribute the value created.

Because of his belief in open source and the ongoing constraints on compute capacity, he said that he expects AGI to look more like the internet: owned by no one and everyone.

I had a similar discussion with Jeremy Howard (Fast.AI) and Alex Long (Amazon) the day before. We spoke about the risk of this power being held by a few, and that one answer is a decentralised structure, though it’s not clear that such a structure will emerge naturally.

The phrase that comes to mind here for me is Charlie Munger’s “show me the incentive and I’ll show you the outcome”. There are ridiculously large incentives for companies to try to own AGI rather than distribute that value.

According to Zack, AI will happen in four phases:

Phase 1: Enhanced applications (ChatGPT, Photoshop Firefly, Office Co-Pilot)

Phase 2: Enhanced applications connected via API (different AI-empowered applications work together). This phase will be short but pretty interesting, and it will get most of the world using AI.

Phase 3: Multi-modal AI control GUI. Future operating systems will have to be inherently AI-based and connect all of your applications, so that it becomes a lot like the internet, with AI as the underpinning of applications.

Phase 4: AGI. AI can improve itself and will unlock a new level of potential that is faster and better. It will be cool and wild, with interstellar travel in our lifetime.

Zack was asked about ensuring an equitable distribution of the value derived from AGI in the future:

We may look upon capitalism as some weird part of society. AGI might flip this bit so that we focus on something that does more to advance the human condition.

I imagine that UBI rolls out first. Governments will figure out how to pay people to exist. We’ll settle into this new reality where we have more time back, and that will change our idea of what money and work are.

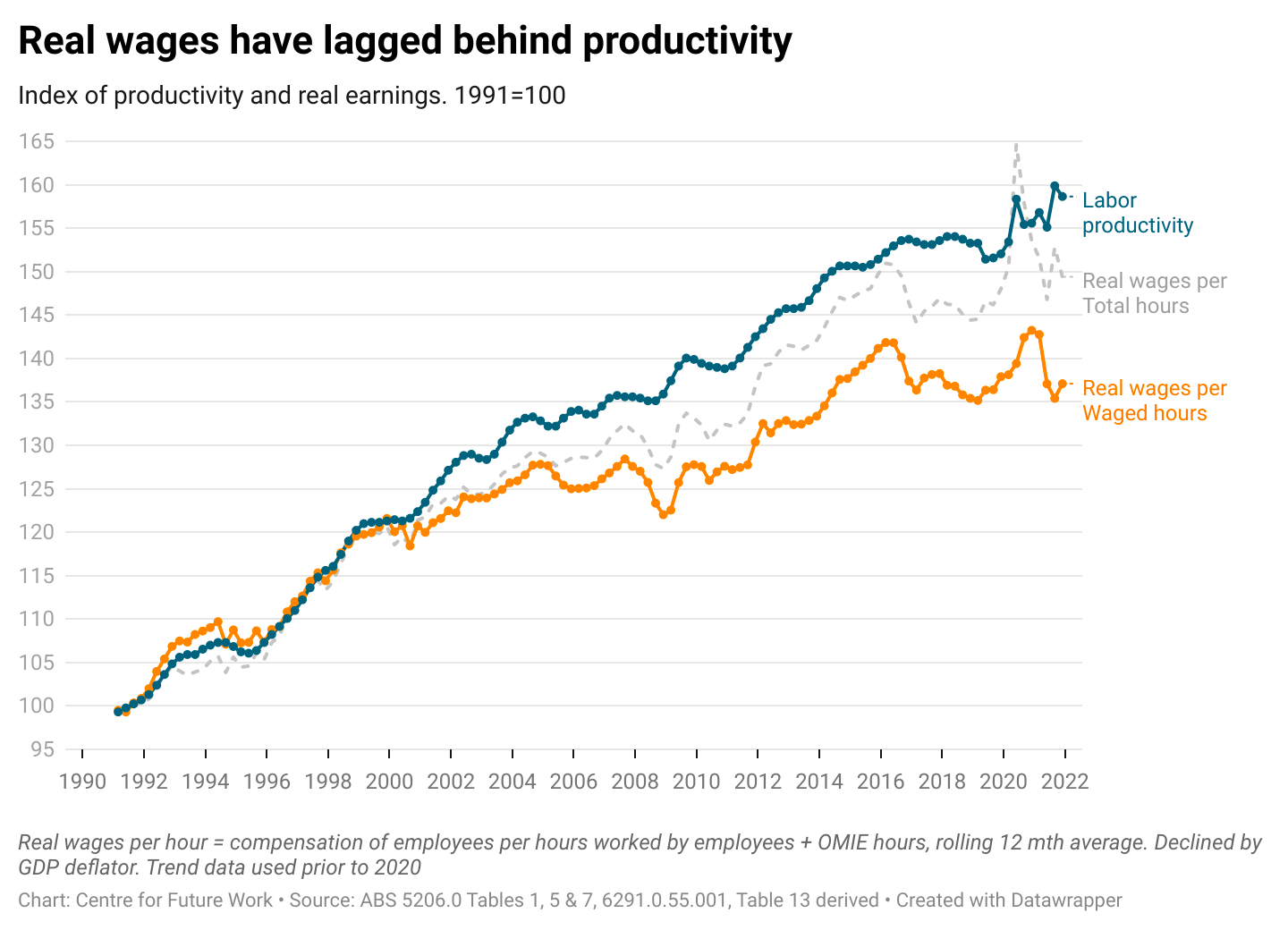

Casey: since the 2000s, increases in productivity seem to have been captured by enterprises instead of employees, given the divide between productivity and wages. I’m no economist, so I’m not across the details, but it makes me wonder whether this trend will really stop thanks to AGI, or whether the two (wages and productivity) will instead completely decouple. Given world history, it’s hard for me to picture a world in which people don’t look to concentrate wealth instead of distributing it.

The graph above is from the Australia Institute here.

He also spoke about barriers to progress in AI:

Self-driving cars could exist today if not for infrastructure and regulations. You can’t tell me that roads are safe because humans drive on them. Roads would be safer with self-driving cars. AGI doesn’t have the same inherent risks yet. Infrastructure and regulations are also the two main risks to reaching AGI.

AI will create all sorts of ways to be bad and it will also create all sorts of ways to fight bad.

We have historically had zero tolerance for machine error, yet we have so much tolerance for human error. I do think this hesitancy will limit our ability to progress science, especially as the pace of technological development increases. I think we need to accept some deaths, to some degree, in the name of technological change. People will die in interstellar travel and they’ll die due to computer vision. We said no to nuclear because of events like Chernobyl and instead burnt millions of tonnes of coal.

That’s all of the notes I’ll share here. As you can see, they’re lengthy. Do visit the full notes if you wish to read more.

Interesting Content

Square Peg Partner Piruze Sabuncu shared this read with our team and said:

Nice read. A new paper just dropped, and I think we will hear “Jagged Frontier” more often!

"AI is weird. No one actually knows the full range of capabilities of the most advanced Large Language Models, like GPT-4. No one really knows the best ways to use them, or the conditions under which they fail. There is no instruction manual. On some tasks AI is immensely powerful, and on others it fails completely or subtly. And, unless you use AI a lot, you won’t know which is which.

The result is what we call the “Jagged Frontier” of AI. Imagine a fortress wall, with some towers and battlements jutting out into the countryside, while others fold back towards the center of the castle. That wall is the capability of AI, and the further from the center, the harder the task. Everything inside the wall can be done by the AI, everything outside is hard for the AI to do. The problem is that the wall is invisible, so some tasks that might logically seem to be the same distance away from the center, and therefore equally difficult – say, writing a sonnet and an exactly 50 word poem – are actually on different sides of the wall. The AI is great at the sonnet, but, because of how it conceptualizes the world in tokens, rather than words, it consistently produces poems of more or less than 50 words. Similarly, some unexpected tasks (like idea generation) are easy for AIs while other tasks that seem to be easy for machines to do (like basic math) are challenges for LLMs.”

Piruze also shared a piece by A16Z and wrote:

Good weekend read. Here are the top 6 takeaways:

Most leading products are built from the “ground up” around generative AI

ChatGPT has a massive lead, for now…

LLM assistants (like ChatGPT) are dominant, but companionship and creative tools are on the rise

Early “winners” have emerged, but most product categories are up for grabs

Acquisition for top products is entirely organic—and consumers are willing to pay!

Mobile apps are still emerging as a GenAI platform

Square Peg Partner Philippe Schwartz shared this blog post by Square Peg portfolio company Deci, describing the architecture for their new LLM, DeciLM, that is 15x faster than Llama 2. Congrats to this team; they have been shipping products and features seemingly as fast as their models!

Ever the Stratechery fan, I enjoyed this piece, NVIDIA On the Mountaintop, in which Ben Thompson discussed the historical parallels (and dissimilarities) between Cisco and NVIDIA.

That’s all, thanks so much for reading!

Casey

I love using Midjourney to create an image for each post. This week’s is of course a reference to PKD’s most (?) famous novel, which I reference in the full notes linked above.